No-Frills GNU/Linux:

Philosophical Foundations

For the reader in a hurry, here's an overview with hyperlinks to the relevant sections, further down the single ultra-long page (you're reading its initial paragraph now) that displays this fourteen-thousand-word essay.

The first section, 'Taking Inventory: Frillies in the Biosphere', and the second, 'Taking Inventory: Frillies in Computing', together introduce the thesis that the problems we face in our deteriorating physical environment are paralleled by problems in the virtual space of software.

The grim thesis is taken a step further in the next section, 'Frillies, Tainter's Spiral, and Societal Collapse': so severe are our software problems that our situation is (not just uncomfortable, but, more radically) unstable, as the late Roman Empire was.

A ray of hope is first offered in a section entitled 'No-Frills GNU/Linux: A First Look', then put into the wider history-of-computing context in a section headed 'No-Frills GNU/Linux, Unix Permaculture, and Noosphere Conservation'.

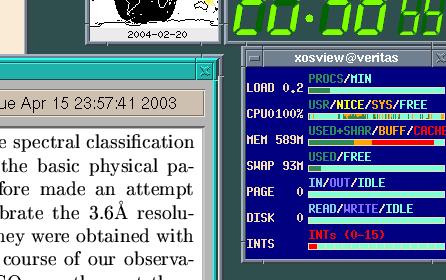

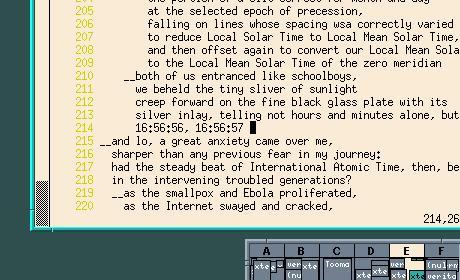

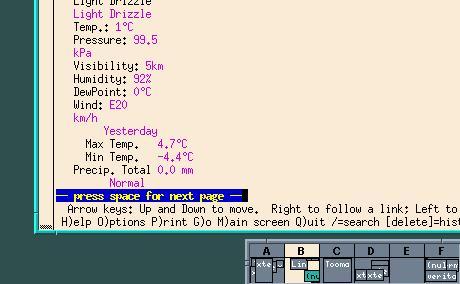

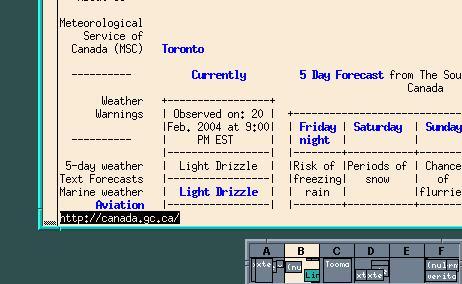

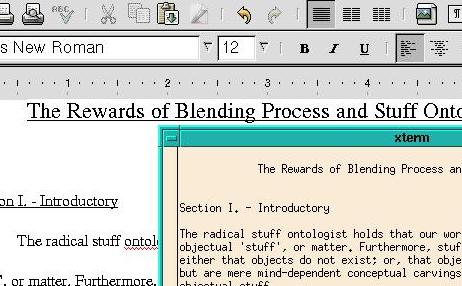

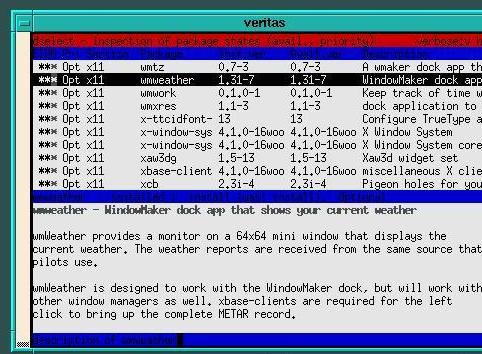

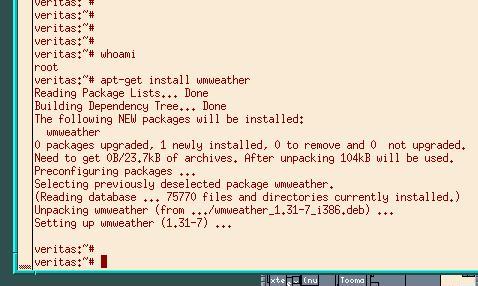

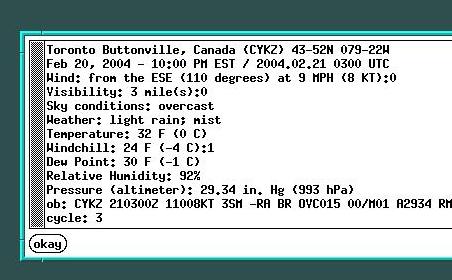

The long final section, 'No-Frills GNU/Linux in the Noosphere: Details from a Debian Implementation', explains in concrete terms, with many a screenshot and many a real-life example, how a permaculturist, deeply noosphere-green computing philosophy may today be implemented. The essence of the long story, which amounts to a tour of the author's own workstation environment, is that we do well to avoid pointless software elaboration. We should, instead, harness within the X Window System the archaic power of the command-line interface. Robust contemporary incarnations of the 1980s glass teletype are lauded, notably in the context of a real-life Debian software-discovery scenario, essentially as lived in 2003 by the delighted author himself.

The essay is respectfully dedicated to the Linux Users Group of Iraq (http://linux-iraq.org/), a community working in difficult conditions for a worthy cause.

Taking Inventory: Frillies in the Biosphere

Our first task in developing a green computing ethos is to inventory current infotechnology's less helpful practices.

As a warm-up to this task, let's compile a list of the pernicious stuff, the frillies, that so many clear-sighted environmentalists are working to purge from the non-cyber world.

Rather obviously, we have the quarter-pound bovineburgers and the accompanying non-compostable cups of brown fizzwater, the disposable cameras and the plastic flowers, the SUVs and the suburban shopping malls - in short, the directly tangible end-user dreck from the final decades in our waning Epoch of Cheap Fossil Fuel.

A little less obviously, we have most cinema and television, including not only our entertainment, but even our television-adapted news and most of our documentaries. There are two reasons why filmed news and documentaries are, as a general rule, to be shunned.

First, since ordinary cinema and television lack bibliographic apparatus such as footnotes, we cannot check the authority for assertions made. That leaves us open to manipulation. Multimedia manipulation can take subtle forms. The FOX television interests allegedly forced their writers to use some such term as 'human health hazard' instead of 'cancer' in reporting on chemical supplements for the American dairy herd. And who, having beheld it, can forget Leni Riefenstahl's clever insinuation of a Church-and-Party nexus in her Hitler-sponsored documentary on the 1934 Nuremberg rally? First, the German cinemagoers saw the conical camping tents of a Third Reich youth movement. A second later, they saw the conical spires of some local church or cathedral. Since there was no explicit claim about the Church in the sound-track commentary, there was nothing any jurist could ever prove to be a lie.

Second, cinema and television do not encourage us to keep written records, to compare two screens simultaneously, or in any other way to activate our intellects.

Here's how I imagine the powers below working up to an appreciation of that second point, in the Halls of Hell:

Junior Demon (deferentially addressing the Father of Lies): Well, your Bad Eminence, we've come up with something new. Late in the evenings, we're getting explicit sex into every parlour in the land.

Father of Lies (rather bored): Sex. You guys have been working on that for a while, if memory serves. Weren't you going to do something way cool, like totally k3wl d00d, with those Biblical harlots?

Junior Demon: But wait - there's more. All round the clock, all the time - over and over, to make viewers stop finding it strange - we show something people - even cops, even soldiers - seldom see in their real lives, people assaulting people to the point of haemorrhage. Ketchup City. Fiction and non. With fist, blade, or bullet, as the screenplay may demand. Sometimes fatally, other times not. Sometimes squirting from arteries, even from the pericardium, but more commonly oozing into light-coloured clothing from handy veins. Sometimes, not always, forming a puddle on floor or ground. Ketchup in the cartoons, ketchup in the news, ketchup and uniforms on the History Channel, ketchup and fine diction on Masterpiece Theatre.

Father of Lies: Yeah, okay: ketchup-vision privs, I presume, for docile prisoners who follow the admin's rules? Ketchup in the maternity ward, glowing sweetly on a little rentable bedside box? Ketchup in the psychiatric ward?

Junior Demon: Yuppers, yea verily. Also ketchup for judge and counsel, unwinding after their day of Law. Ketchup-vision for firemen, for paramedics, as they sit around their stations waiting for callout. Nonstop ketchup with fists and guns on big, bright communal screens in the nursing home. Ketchup in places you would, like, not believe could ever opt for ketchup. Ketchup-on-VHS, ketchup-on-DVD to reward the scientist after a hard day of duty in Antarctica, or maybe on the ISS. Soon we'll get a 24-Hour Ketchup Channel into Carmelite convents.

Father of Lies (paying a grudging compliment): You know, that's actually kind of ... original.

Junior Demon: But lissen: it gets better. We've trained everyone to absorb information in a stream. A stream, get it? Stream as in passive? Not reading, but being read to. Not observing, but being shown. No pulling, just pushing. It's a stream, get it? Somebody says something important, some world expert on Oprah or the Discovery Channel pushes some significant content at you, and you - you aren't given time to write anything down! The camera rolls, the stream don't stop. You one-quarter learn, you one-tenth learn, all kinds of stuff, but you never really learn anything. Not even how your government makes laws. Not even how to catch a fish. Not even what, ahem, might cause Attention Deficit Disorder in preteens. Cause as soon as we push one bit of content at you, we push the next bit, and then the next and the next and the next and the next and the next and the next.

Father of Lies (now impressed): Hey, this actually is getting original. Lick our dust, Sophocles.

In agribusiness, we have seeds whose seedlings are engineered to produce only infertile seeds.

In business at large, we have the concept of a corporation as a legal person, distinct from the human beings that make it up. And not only that concept (recently pilloried in, as the irony of events would have it, an influential Canadian piece of documentary cinema): we must also relegate to the junk heap of history the very idea of a "business relationship", as distinct from an ordinary human relationship. It's an idea that notoriously licenses a kind of double standard, allowing us to practise on clients, or obligating us to accept from them, species of insincere self-serving warmth - or, as predictable sequelae to insincere displays of friendliness, species of power-playing coolness - not countenanced among neighbours on a decent street.

Then, again (you knew this would come sooner or later), war, in any of its forms. Again (this, too, you knew would eventually surface on my list), much, albeit not all, government.

You will say, on surveying my rather alarming inventory of junk outside cyberspace, that I am urging some combination of Amish values with anarchy. You will be right in so saying. Admittedly, I'm a radical Catholic, and so stand to some extent apart from the admirable radical Protestantism that sustains the Amish. I depart from Amish thinking at this point and that, for instance in having no objection to electric appliances. They're fine, say I, provided they draw their power from solar cells, from wind turbines, or from environmentally gentle forms of hydro.

Horrified as you are by my list, you will not now be able to stop reading. Aware, then, that you're hooked, I proceed to inventory the counterproductive stuff in computing.

Taking Inventory: Frillies in Computing

(A) First among cyberfrillies comes e-mail spam. 'Nuff said.

(B) Second come the games.

'Nuff said? Well, maybe a note could be added on games, so rich in sound-effects and (such are our expectations from television) ketchup. A Web designer and I once had a really good chat about games. We thought we should develop a novel one, to be marketed as 'Civilization'. Our envisaged game, unlike its competitors, would address civilization in depth. Nothing whatever, of course, would happen. The players would take interminable cyberwalks between impeccably pruned hedgerows in rolling green English cybercountryside, or else would doff their virtual tweeds and sip cyber-Darjeeling or cybersherry in oak-panelled rooms. Players could lose points for such solecisms as picking up the wrong cyberfork. (I gather forks work like this, that they give you a teeny-weeny outer one for the opening filet-of-sole and an approximately equally teeny-weeny inner one for the concluding crêpes. Isabella Beeton, born 1836, departed this life 1865, remarked that whereas the beasts of the wild eat, people dine. In playing 'Civilization', it might be handy to consult her Book of Household Management: Comprising Information for the Mistress, Housekeeper, Cook, Kitchen-Maid, Butler, Footman, Coachman, Valet, Upper and Under House-Maids, Lady's-Maid, Maid-of-All-Work, Laundry-Maid, Nurse and Nurse-Maid, Monthly, Wet, and Sick Nurses, etc. etc.: Also, Sanitary, Medical, & Legal Memoranda; With a History of the Origin, Properties, and Uses of All Things Connected with Home Life and Comfort.) We thought this game might secure a cult following, say in the tough and mean New York punk scene.

(C) Next come Web sites that break the standards of the World Wide Web Consortium (W3C).

It's remarkable just how badly things can go wrong at what might be expected to be paragons of good Web development. At the BBC, for example, both the graphics-intensive http://news.bbc.co.uk and the computationally less demanding http://news.bbc.co.uk/text_only.stm purport to conform to the W3C "HTML 4.0 Transitional" standard. And yet when I last parsed with http://validator.w3.org/, at, or a minute or two before, Coordinated Universal Time 20040224T0442Z, the former yielded 33 breaches of standard. What, then, about the simpler page, designed to display only images of journalistic import, and to load cleanly even in primitive browsers? Here the number of breaches identified was not 33, but 74.

Indeed, all but four of the pages by authors other than me that I refer to in this essay fail to parse cleanly, however worthy their content is in most cases. I checked all of them between 20040224T0439Z and 20040224T0449Z (in all but the case of the W3C page simply by punching the CTRL-ALT-V combination in the unhappily licensed, but for this exercise convenient, Opera browser), and checked most of them on one or two other days too. The four pages that did parse cleanly were, predictably, by GNU/Linux-friendly computer gurus: the Linux Users Group of Iraq, W3C, the Free Software Foundation, and Eric S. Raymond.

On my own Web site, HTML code is for the most part valid, and where valid is usually or always explicitly proclaimed to be so with a W3C badge at the foot of the page. There are, however, occasional puddles of invalidity. When you read these words, there will almost certainly be a few pages of arguably minor journalistic import, somewhere, to which I could not affix the badge. Worse, there may well be a few pages of significant journalistic import, generated by the jade SGML/XML-to-HTML tool, and consequently failing to display my favoured left-margin decorations, that likewise flunk - a grim reminder (were reminder needed) that we are not yet, at this point in the history of computing, to trust all tools purporting to generate HTML.

No, folks: in the Year of Grace 2004 or so, if you want your HTML to be standards-compliant, you still have to be careful with automated tag-emitting contraptions. (HTML demands, rather, hand-tagging, in a text editor like vi or emacs, and vigilance. Not altogether a bad thing, since we are thereby called to exercise our neurons.)
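Part of that vigilance can be mechanized. What follows is a minimal sketch, assuming Python 3 and its standard html.parser module, of a hypothetical unbalanced_tags helper that reports start tags never closed and end tags with no matching opener. It is deliberately stricter than HTML 4.0, which lets authors omit some end tags (such as </p> and </li>), and it checks only nesting, not the element and attribute rules that http://validator.w3.org/ enforces, so it supplements the validator rather than replacing it.

```python
from html.parser import HTMLParser

# Elements that take no end tag in HTML 4.0 (a partial list, assumed
# sufficient for illustration).
VOID_ELEMENTS = {"area", "base", "br", "col", "hr", "img",
                 "input", "link", "meta", "param"}


class TagBalanceChecker(HTMLParser):
    """Keeps a stack of open elements and records nesting problems."""

    def __init__(self):
        super().__init__()
        self.stack = []     # (tag, line) pairs still awaiting an end tag
        self.problems = []  # human-readable complaints, in order found

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.stack.append((tag, self.getpos()[0]))

    def handle_startendtag(self, tag, attrs):
        # XHTML-style <br/>: opens nothing, so nothing to close later.
        pass

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return
        if self.stack and self.stack[-1][0] == tag:
            self.stack.pop()
        elif any(open_tag == tag for open_tag, _ in self.stack):
            # The matching opener is deeper in the stack: everything opened
            # after it was left unclosed.
            while self.stack[-1][0] != tag:
                open_tag, open_line = self.stack.pop()
                self.problems.append(f"line {open_line}: <{open_tag}> never closed")
            self.stack.pop()
        else:
            self.problems.append(f"line {self.getpos()[0]}: stray </{tag}>")


def unbalanced_tags(html_text):
    """Return a list of nesting complaints; an empty list means balanced."""
    checker = TagBalanceChecker()
    checker.feed(html_text)
    checker.close()
    for tag, line in checker.stack:
        checker.problems.append(f"line {line}: <{tag}> never closed")
    return checker.problems
```

For instance, unbalanced_tags("<div><p>Hi</div>") returns ["line 1: <p> never closed"], while well-nested markup yields an empty list.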

(D) More radically and less obviously, we face junk in the guise of software that is not free-as-in-freedom, in other words software that comes with restrictions on execution, copying, distribution, analysis, or modification. Software can typically be seen to be unfree not only from restrictive terms in some End User License Agreement (as when a EULA restricts the making of copies), but also, starkly and simply, from the unavailability of source code.

Here's why availability of source code is a necessary condition of software freedom. When the distributor gives you access to the binary executable (the compiler output) without access to the underlying programmer-readable compiler-input source code, you have no guaranteed way of understanding what the software does. You must instead rely on your own testing and whatever assertions the vendor may make in the user's manual. You have, in particular, no guarantee that the software is free of Internet-ready trapdoors. And with only the binary executable available, you have no practical way of modifying the software, except as provided for in the user interface. You have essentially, then, handed control of your computer over to the vendor.

That surrender of control is sometimes no big deal. Sometimes, on the other hand, it's worrisome. As when, for instance, you are a public servant, running software tools bought out of the public exchequer, perhaps for some high and serious purpose such as the processing of refugee claims or the updating of electoral rolls.

If such scenarios sound strained to you, Google the search phrase NSA trapdoor security. You may find, as I did at or very near 20040223T2243Z, allegations to the effect that the National Security Agency had trapdoors placed in a 1990s "international" version of Lotus Notes sold to the German Ministry of Defence, the French Ministry of Education and Research, and the Ministry of Education in Latvia. You may also find, as I did, an allegation, supported with a reference to disassembly results disseminated at the Crypto 98 conference, that the NSA had a hand in ADVAPI.DLL. (That DLL, we are given to understand, sat within the C:\Windows\system folder of late-1990s Gatesmobiles.)

I said that availability of source code is a necessary condition of freedom. It is not a sufficient condition, since the programmer could make source code itself available under a restrictive EULA. In practice, though, where restrictive licenses get imposed, the source code itself gets withheld. That makes sense, since once you are in possession of the source code, the only possible remaining restrictions on your execution, your copying, your distribution, your analysis, your modification are of a juridical, as opposed to a technical, character: with the source code in your machine, no technical factors restrain you from running it through your own chosen editing or compiling tools.

The Free Software Foundation folks articulate the free-as-in-freedom philosophy at http://www.gnu.org.

On a strictly pragmatic level, a burgeoning literature, in part itself from the Free Software Foundation grouping, in part from thinkers outside that grouping, argues that closed-source, and thus by logical entailment unfree, software tends to have more bugs than its open-source counterparts. A celebrated presentation of this pragmatic line of analysis is Eric S. Raymond's 'The Cathedral and the Bazaar' (under the 'Essays' link at http://www.catb.org/~esr).

Pragmatic considerations cannot be ignored. But why, in unpragmatic, value-governed terms, must we reject unfree software? Suppose we concede for the sake of argument (what in reality we should not concede) that surrender of machine control is morally and politically licit. Deep reasons remain for rejecting unfree wares. If we countenance them, we erode the intellectual commons, thereby doing a disservice to humanity at large. Among other things, we insult the numerical majority of humanity living outside the rich post-industrial nations.

For, I argue, how are you supposed to administer your affairs as an English teacher on a provincial campus near Aswan High Dam, where you are lucky to have a desk and a desk lamp, where your students suffer schistosomiasis and vitamin deficiencies? Make arrangements with Redmond, Washington, for a "licence" before you are allowed to type up your lecture notes, compromising your dignity by arranging it as a corporate gift? By arranging to accept it as a piece of largesse ever-so-voluntarily bestowed on you, who are herewith, as part of the inner logic of the unforced charitable transaction, proclaimed poor and weak, a panhandler?

Well, some will say, let the licensee pay, as a dignified and autonomous agent in the global economy. But how does the typical English teacher, the typical history teacher, the typical anything teacher, live? The ones able to afford the bottled water and the latest copy of Newsweek and the legal boilerplate from One Microsoft Way, Redmond, WA 98052-6399, are a minority in the planet's population of teachers.

It is a sign of respect for humanity's intellectual commons that core data-processing software for astronomy (that's my own field) is either free-as-in-freedom or not too hopelessly far from free. Here in North America, people favour the Image Reduction and Analysis Facility (IRAF), from the USA's National Optical Astronomy Observatory. IRAF, although released under some restrictions, does make it into the not-appallingly-shackled "contrib" section (as distinct from the genuinely liberated "main" section) of Debian GNU/Linux. Some Continental astronomers, I gather, favour MIDAS, a tool made formally and incontestably free under the rigorous GNU General Public License by the European Southern Observatory. Heavy shackling of those two tools would hardly make sense, since taxpayers' money was spent on their development.

Incidentally, it would in a parallel way be objectionable for our universities, being in many cases taxpayer-funded, to put licensing restrictions on their course materials (the humble lecture notes, as distinct from the research-institute software). Open-society logic has been embraced at the Massachusetts Institute of Technology, where course notes get put onto public Web servers. Taxpayers in all jurisdictions should now be demanding such "open courseware" initiatives from their Ministries of Education.

The argument regarding the sanctity of the intellectual commons does have to be qualified a little. Specialized software may, as a matter of unhappy commercial necessity, have to be developed under licence. Physicists, even in the poorest countries, nowadays need not merely pocket calculators or computer spreadsheets, but symbolic computation packages. Those are tools of almost terrifying sophistication, that can (to take a modest example) evaluate the definite integral of the two-argument function y-times-square-root-of-sum-of-x-squared-and-y-squared over the half-disc of radius a with straight edge on the x axis, lying above that axis and symmetrically placed with respect to the y axis, and get the correct symbolic result, a-to-the-fourth over 2. We're talking true mathematics here, not arithmetical number-crunching as we might program it with simple C++ or Java library routines. Such integrals are rendered all the worse by the need to nit-pick. Doing the computation myself, by hand, a few days ago, in rectangular coordinates, I naively assumed that for every real number z, the 3/2 power of z-squared is z cubed. Not so! The 3/2 power of z-squared is the cube of the absolute value of z: if z is minus 2, the 3/2 power of z-squared is 8, whereas the cube of z is minus 8. The world has few symbolic computation packages. Mathematica exists, and I know it is good, and I guess nice results can in many situations be had also from Maple, Matlab, and IDL. Were it not for the intellectual property rights so zealously guarded by EULAs, developers might have proved unable to make a living putting the tools together. So we have to resign ourselves to putting the tools under severe licensing restrictions even when they're going to that hardscrabble campus by Aswan High Dam. We even have to resign ourselves to the mind games customary in commerce, as when software that is first developed with all its functions intact subsequently gets degraded into a reduced-function version, ready for marketing at a "bargain price" to the mere full-time student.
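For readers who want to see where the nit-picking bites, the hand computation in rectangular coordinates can be sketched as follows, taking the half-disc to lie in the upper half-plane ($y \ge 0$). The inner integral yields to the substitution $u = x^2 + y^2$; the crucial step is recognizing that $(x^2)^{3/2} = |x|^3$.

```latex
\begin{aligned}
I &= \int_{-a}^{a} \int_{0}^{\sqrt{a^2 - x^2}} y \sqrt{x^2 + y^2}\, dy\, dx \\
  &= \int_{-a}^{a} \left[ \tfrac{1}{3} (x^2 + y^2)^{3/2} \right]_{y=0}^{y=\sqrt{a^2 - x^2}} dx
   = \frac{1}{3} \int_{-a}^{a} \left( a^3 - (x^2)^{3/2} \right) dx \\
  &= \frac{1}{3} \int_{-a}^{a} \left( a^3 - |x|^3 \right) dx
   = \frac{1}{3} \left( 2a^4 - \frac{a^4}{2} \right)
   = \frac{a^4}{2}.
\end{aligned}
```

Writing $x^3$ in place of $|x|^3$ would make that term integrate to zero over the symmetric interval and give the wrong value $2a^4/3$. In polar coordinates, where $y = r\sin\theta$ and $dA = r\,dr\,d\theta$, the same integral is $\int_0^{\pi}\int_0^{a} r^3 \sin\theta \, dr\, d\theta = 2 \cdot a^4/4 = a^4/2$, a handy cross-check.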

Similar examples arise outside science, in the trades. Book publishers, for instance, farm out the writing of back-of-the-book indexes to specially trained professional indexers, some of whom now work in the poor countries. No such indexers can nowadays be expected to use index cards or the grievously misnamed "indexing modules" in word processing software. No: whether you are in Oregon or in Uttar Pradesh, in Ontario or in the Philippines, the only way to do justice to the intellectual content of those conceptually dense publisher page proofs is to index with a professional indexing tool. The world has few such tools. CINDEX exists, and I know it is good, and I guess nice results can be had also from Macrex and Sky Index. Here again, were it not for the intellectual property rights so zealously guarded by EULAs, we might have to fall back on radically less efficient alternatives. (The problem of indexing books was addressed in open-source terms in the 1980s by distinguished Unix innovator Brian Kernighan, whose awk scripts remain available at http://cm.bell-labs.com/cm/cs/who/bwk/. But it is a far cry from his awk solution, or again from the \makeindex provisions of LaTeX, to the flexibility of CINDEX and its kin.)
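The core bookkeeping behind any such tool, gathering each term's page references and collapsing runs of consecutive pages into ranges, fits in a few lines. What follows is a minimal sketch, assuming Python 3 and input already reduced to hypothetical (term, page) pairs; it reproduces neither Kernighan's awk scripts nor anything inside CINDEX, and it ignores the subheadings, cross-references, and collation rules that make professional indexing hard.

```python
from collections import defaultdict


def page_ranges(pages):
    """Collapse page numbers into sorted 'first-last' ranges, dropping repeats."""
    ranges = []
    start = prev = None
    for page in sorted(set(pages)):
        if start is None:
            start = prev = page
        elif page == prev + 1:
            prev = page          # still inside a run of consecutive pages
        else:
            ranges.append(str(start) if start == prev else f"{start}-{prev}")
            start = prev = page  # begin a new run
    if start is not None:
        ranges.append(str(start) if start == prev else f"{start}-{prev}")
    return ranges


def build_index(entries):
    """Turn (term, page) pairs into formatted index lines, one per term."""
    pages_by_term = defaultdict(list)
    for term, page in entries:
        pages_by_term[term].append(page)
    # A case-insensitive sort: a crude stand-in for real index collation rules.
    ordered = sorted(pages_by_term.items(), key=lambda item: item[0].lower())
    return [f"{term}, {', '.join(page_ranges(pages))}" for term, pages in ordered]


if __name__ == "__main__":
    sample = [("IRAF", 12), ("Cochabamba", 40), ("IRAF", 13),
              ("IRAF", 14), ("IRAF", 30), ("Cochabamba", 40)]
    for line in build_index(sample):
        print(line)  # prints "Cochabamba, 40" then "IRAF, 12-14, 30"
```

Everything a human indexer actually contributes, deciding which concepts deserve entries and under what headings, lies outside a sketch like this, which is precisely the gap that tools such as CINDEX are built to support.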

Publishing, farming, mining, fishing, manufacturing, medicine, law - you name it, they all, even leaving aside the enormous emerging demands of robotics, may in any part of today's networked economy request, in tones of pained embarrassment, the crafting of unfree trade-specific software.

Not every tool, then, can be housed in the intellectual commons. But we must recognize closed-source software for what it is, an intermittently necessary evil. What we must not just courteously deplore, but outright work against with all the peaceful and law-abiding means at our disposal, are closed-source provisions for nonspecialized software - for, that is, the software embedded in our common and universal, in our topic-neutral, intellectual infrastructure. Restrictive licensing there - on operating systems, on Web browsers, on text editors - would be as inappropriate as EULA patrols on the TCP/IP protocol suite or on the ASCII character-encoding rules. Such protections call for the same lighthearted ridicule as all of us rightly direct at would-be privatizers of the world's water.

Yes, that Canadian film. Feeble though documentary cinema is as a tool for sociopolitical analysis, it was through that very piece of cinema that I learned the following fact regarding water privatization: commercial interests in Cochabamba, Bolivia, temporarily succeeded in making it illegal for private citizens to collect even rainwater. Since the content pushed at me in the darkened screening room, at a tempo that discouraged the taking of notes, was astonishing, I later checked the accompanying book. And yes, my recollection (confirmed also by a panel speaker at the University of Toronto Newman Centre on 2004 February 22) was accurate. Do as I did and look up 'Bolivia' in the index. The book is The Corporation: The Pathological Pursuit of Profit and Power. The author is Joel Bakan, who teaches in the Faculty of Law at the University of British Columbia. The publisher is Free Press. The year of publication is 2004. The ISBN is 0743247442.

(E) Next in our inventory of cyberspace superfluities, we have software which, be it free-as-in-freedom or shackled, addresses the needs of only the ordinary non-specialist, and yet fails to run on small-office hardware more than half a decade old. In 2004, we may a little arbitrarily define such hardware as the slow Pentium (the i586) with 48 MB or less of RAM.

Let's for a nanosecond belabour the obvious: we stress the biosphere by scrapping hardware, with its load of toxic residues, long before its lifetime is over. We all know, for instance from anecdotes in our local GNU/Linux User Group regarding long-lived hard drives, or again from our experience with keeping that valiant little early-nineties IBM PS/1 monitor running on a minor workstation in the Year of Grace 2004, that the natural lifetime for much hardware is on the order of fifteen years. We also all know, for instance from glancing at the Catholic Agency for Overseas Development (CAFOD) report at http://www.cafod.org.uk/, under what third-world working conditions shiny new hardware, so tempting as we gaze on it in the retailer's window, gets assembled. (In China, says CAFOD, you may be forced to wear a special red overcoat when the brigade leader finds a mistake in your soldering. Let's not even get started on the hours, the wages, the safety.)

What we really must, in the longer term, work toward is a "fair-trade hardware" movement, paralleling the existing fair-trade movements in tea and coffee. Hardware will count as fair-trade if the workers hold written contracts guaranteeing them at least local legal minimum wage for every hour worked, and are free to work only an eight-hour day and a forty-hour week, and are free to unionize, and are required as a formal condition of employment to have reached the local juridical adult-citizen age. These are themselves poor labour standards. We will in practice expect more, favouring those vendors whose hardware is produced in worker-owned cooperatives, democratically run along the lines of, say, the Scott Bader plastics group in Northamptonshire or the industrial appliance-assembly cooperatives in the Basque Country. We in the rich countries will pay a lot more for our hardware (to the point where we will be anxious enough to keep it running for fifteen years), and there will be fewer factory jobs available in the developing countries, and so perhaps all of us will be a lot poorer. But what the heck: either we value money, or we value truth and justice.

(F) And then we have software that, be it free-as-in-freedom or shackled, be it hardware-prodigal or hardware-frugal, induces its users to favour presentation over content. The January-February 1999 issue of the Jewish-and-interfaith Tikkun has an article from biologist David Ehrenfeld, under the intriguing title 'The Coming Collapse of the Age of Technology'. You can read it at http://garnet.acns.fsu.edu/~jstallin/complex/readings/Ehrenfeld.htm. Here's what Prof. Ehrenfeld has to say about content and presentation in Grade Six:

...on February 15, 1996, President Clinton launched his Technology Literacy Challenge, a $2 billion program which he hoped would put multimedia computers with fiber optic links in every classroom. 'More Americans in all walks of life will have more chances to live up to their dreams than in any other time in our nation's history,' said the president. He singled out a sixth-grade classroom in Concord, New Hampshire, where students were using Macintosh computers to produce a very attractive school newspaper. Selecting two editorials for special notice, he praised the teacher for 'the remarkable work he has done'. An article in New Jersey's Star Ledger of February 26, 1996 gave samples of the writing in those editorials. The editorial on rainforest destruction began: 'Why people cut them down?' The editorial about the president's fights with Congress said, 'Conflicts can be very frustrating. Though, you should try to stay away from conflicts. In the past there has been fights.'

And I'll add to this, without naming names, that I myself have worked on the thoroughly computerized task of textbook editing.

It is somewhat remarkable that a hurried publishing house should spend expensive time fussing over such minutiae as the boldfacing or the italicization of a semicolon in its Quark files.

It is still more remarkable that there should be fussing over hue and saturation in textbook images printed with four-colour web presses on fine coated stock, for a Canada whose impoverished schools may force students to share those glossy textbooks. (There are, say, thirty students in the classroom. But there are, say, twenty books to go around. A teacher in Nova Scotia gave me quite an earful on that situation lately.)

And it is very remarkable that, amid all this computer-enabled, computer-driven fuss over the way the textbook looks, the publishing house should show scant interest in its copy editor's warning that not all fish breathe with gills. Or again in the copy editor's warning that glass is not a crystalline solid.

Dear House: Although we speak of "crystal goblets", glass is not a crystalline solid. It is amorphous: its atoms lack the regular, repeating lattice that defines a crystal. You see, House, you really should invest five, even twenty, minutes in changing your example, perhaps adducing rock salt in place of glass, but at any rate by hook or ingenious rule-bending crook cleaning up the egregious mistake which somehow escaped the developmental editor and both the professor-consultants. Even if, production schedules being what they are, that now means that the final Quark file makes some semicolon inappropriately italic, or that the final Photoshop artistry makes the pretty lizard look too green.

Here I have respected my erstwhile client's privacy by changing details, even changing the scientific subject-matter a bit. The real problem was not with glass, but with something equally straightforward, that shall in the interest of confidentiality not be named here. But trust me, gentle reader. The Fault That Dare Not Speak Its Name was some gaffe that a teacher in tony Upper Canada College could correct in effortless eloquence, jeering the hapless House from the oaken lectern of a cozy and privately funded classroom. Some slip-up conversely doomed to stand, uncorrected, in the starved, struggling, crowded prefab teaching huts of Toronto's Parkdale, or of Canada's rural hinterlands.

(G) Finally - and this, I'd say, is at the bottom of all our various cyberwoes - comes the inappropriate drive to complexity. As our software bloats, accreting thousands and millions of lines of additional source code, adding on hundreds and thousands and hundreds of thousands of technician hours, our vulnerability to catastrophic failure increases.

For years, software engineers (on the whole a brilliant and diligent community) have found themselves in a dilemma recalling Joseph Tainter's Collapse of Complex Societies. In an analysis embracing among other things the fall of Rome, Tainter finds that newly complexified systems face subtle emergent threats, calling for still further investments in complexity. Those investments, he says, create still subtler security exposures, calling for yet more investment, until one day a sorry spiral of diminishing returns causes the whole top-heavy edifice to collapse into something a lot simpler. In Rome, he says, they kept adding legislation, currency manipulations, treaties with the Germanic tribes, more legislation. In the end, people got sick of it, finding rule by local, petty barbarian kings less nasty than the flavour of government on offer from the distant and ineffectual emperors.

There was a time when we had mere e-mail. Now we have e-mail with spam filtering. But (such is the logic of the spiral) existing spam filtering is not perfect. A significant part of our spam consequently now promises us not penis enlargement, as in days of yore, but freedom from spam. May we now anticipate spam that promises freedom from spam about spam, to be followed in due course by spam promising freedom from spam about spam about spam?

There was a time, before the widespread adoption of MIME, when we coped without e-mail attachments, using FTP on those relatively rare occasions when we had to ship a binary file, such as an image. Now we have attachments. They're a minor nuisance in the open-source GNU/Linux world (where we trash them immediately in most cases, and in case of real need first save them outside the mail-reading environment, then open them manually with some appropriately selected tool). They're a real peril in the closed-source world of Microsoft, where it is only too easy to "double-click" in that ever-so-complex graphical user interface, causing a maliciously crafted attachment - barriers to execution and propagation of malicious code are lower in Microsoft than in GNU/Linux - to deliver its payload. So in the Microsoft universe we have an expanding (that spiral again) anti-virus industry.

The industry was not small when I had my year in an information-technology department at Digital Equipment of Canada, from the summer of 1994 to the summer of 1995. Back then, the big fear was the boot-sector virus, transmitted through the exchange of unscanned floppies in the physical community.

Nowadays, with viruses and worms travelling in e-mail attachments, the security game is transformed. A couple of years ago, a rather senior Ontario public servant of my acquaintance described how her part of the service, perhaps as large as an entire Ministry, had to cut itself off from the e-mail universe for a couple of weeks as technicians purged the local systems of some celebrated worm or virus, perhaps Melissa. By then, according to BBC reports, the cost to the world from a single large outbreak, in technician fees, legal fees, and lost productivity, was reaching or exceeding one thousand million American dollars.

And in the week I prepared the first draft of this essay (the final week of January in 2004) the Mydoom worm was making its rounds via Microsoft-executable e-mail attachments. The epidemic had perhaps subsided by the end of the week. At its peak, though, said the BBC, messages either containing the malicious binary or dealing with it were making up about 30 percent of world-wide e-mail traffic. British security house mi2g was around that point putting out a total remediation-cost estimate for Mydoom not of one thousand million American dollars, but of about twenty thousand million. An inflated estimate? It's in the long-term self-interest of such security analysts to make their figures accurate. Yet if in a perversely skeptical spirit we insist on slashing the twenty thousand million in half, we still have fully a tenth, or more, of what newspapers were presenting as the cost of cleanup in Lower Manhattan after the physical-infrastructure attack of 2001 September 11.

There was a time when recordkeeping on a personal computer was simple, involving little more than plaintext files. I plan to argue elsewhere, at length, that with the speed of even a slow Pentium and the flexibility of simple GNU/Linux tools such as vi, that's still the right way to go for most applications. It's certainly appropriate if one has only a few tens of thousands of records to manage, with only simple report-generation requirements. (I have perhaps 800 or 1000 records in my list matching people and institutions with phone numbers and e-mail addresses. Although I retrieve individual entries from this list many times a week, I have just one formal "report" to generate, namely a set of around 62 Christmas papermail addresses. That set I extract from the master list with a tiny script, comprising perhaps half a dozen lines of Perl. A few people, perhaps particularly busy sales or journalism professionals, are liable to have contact lists ten times as long as mine. In engineering language, that's only a one-order-of-magnitude increase: probably not enough to justify technology more elaborate than my own Perl and vi.)
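The Perl script itself is not reproduced here. As a rough sketch only, here is a filter of the same kind written in Python, assuming a hypothetical master-list layout: records separated by blank lines, the first line of each record holding the name, and any record destined for the Christmas list carrying a line that begins `XMAS:` and holds the postal address, its lines separated by ` / `. The `XMAS:` convention and the name `christmas_addresses` are illustrative inventions, not the author's actual format.

```python
import re


def christmas_addresses(text: str) -> list[str]:
    """Return name-plus-address blocks for records tagged with an 'XMAS:' line.

    Hypothetical format: records separated by blank lines; first line is the
    name; a tagged record contains 'XMAS: street / city / ...'.
    """
    addresses = []
    # Split the master list into records at blank (or whitespace-only) lines.
    for record in re.split(r"\n\s*\n", text.strip()):
        lines = record.splitlines()
        if not lines:
            continue
        name = lines[0].strip()
        for line in lines[1:]:
            if line.startswith("XMAS:"):
                # Turn the ' / ' separators into real line breaks for printing.
                street = line[len("XMAS:"):].strip().replace(" / ", "\n")
                addresses.append(f"{name}\n{street}")
    return addresses


SAMPLE = """Jane B. Citizen
Phone: 555-0100
Email: jane@example.org
XMAS: 12 Elm St / Toronto ON

Acme Widget Ltd.
Phone: 555-0199
"""

if __name__ == "__main__":
    for address in christmas_addresses(SAMPLE):
        print(address, end="\n\n")
```

The master list stays an ordinary text file, still editable in vi; the script only reads it, so a bug in the script cannot corrupt the records.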

Now what is the market actually offering us? An invitation, of course, to climb Tainter's spiral. There is quite an industry in "Professional Information Management", encouraging us to entrust our precious addresses-and-phone-numbers lists to formal databases, such as are properly used by airlines and national police bureaux. I knew a guy who kept his contact list in such a management system. The inevitable happened: a file got corrupted, or something, and he lost access to the list. No doubt he could restore access upon consulting (the next twist in the spiral) one of the prestigious, expensive data-recovery houses.

As for management of phone numbers, so too for management of URLs. With the advent of Google - a technology with a point, since it really does reduce complexity in our offices - we have a much reduced need for keeping records of URLs. Still, it is occasionally necessary to note the odd recondite fact, such as that a certain Welsh eco-village keeps its pages at http://www.brithdirmawr.com/, or again that http://mha-net.org has pointers to environmentalist North American fieldstone-and-firebrick artisans. (The pointers would prove useful if one ever sought to build a fireplace in the thermally efficient Russian or German style, so as to burn Kyoto-friendly wood in place of fossil fuel.) Now how do we keep such facts? The simplest, and therefore the safest, procedure is to write the hard-to-Google-retrieve URL into a plain-text file, together with whatever concise or prolix free-format explanatory annotations we may need. But just as the computer industry offers us fancy addressbooks, so it offers us solutions for URL management, in the guise of bookmarks. And so (it's the spiral's iron logic) people read up on techniques for grouping bookmarks, for deleting bookmarks, for keeping bookmarks in all their delicate groupings undeleted through computer upgrades. Were there sufficient demand, we'd have consultants specializing in bookmark management.
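A plain-text URL file of this kind needs no special software to search; on a GNU/Linux box, `grep -i` does the job. For illustration, here is a small Python sketch assuming a hypothetical layout in which each entry is a URL line followed by free-format annotation lines, entries being separated by blank lines. The name `find_entries` and the sample notes are illustrative only.

```python
import re


def find_entries(text: str, keyword: str) -> list[str]:
    """Return every blank-line-separated entry that mentions keyword, ignoring case."""
    entries = [e.strip() for e in re.split(r"\n\s*\n", text) if e.strip()]
    needle = keyword.lower()
    return [e for e in entries if needle in e.lower()]


NOTES = """http://www.brithdirmawr.com/
Welsh eco-village.

http://mha-net.org
Pointers to North American fieldstone-and-firebrick artisans,
useful for a Russian- or German-style wood-burning fireplace.
"""

if __name__ == "__main__":
    for entry in find_entries(NOTES, "fireplace"):
        print(entry)
```

Because the annotations are free text, the search finds an entry by any word we happened to write beside the URL, with no grouping scheme to maintain.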

Frillies, Tainter's Spiral, and Societal Collapse

If the flood of frillies simply made our offices complex, we could live with it. What's really scary, though, is the inherent instability of the situation, here as in Rome.

I've explored the scary possibilities for junk outside cyberspace, taking the perspective of the next half-dozen human generations, in my 'Utopia 2184: A Green Manifesto in the Traditions of the Permaculture and Catholic-Worker Movements'. That long essay is available in the 'Literary' section of my http://www.metascientia.com, with mirroring at http://www.interlog.com/~verbum/. To cut a long story short, my suggestion is that among the various woes facing us, it is the end of our cheap energy supply that will get us the really colourful press. We'll be remembered for that one in folk legend, as the Romans are remembered for their miseries at the hands of Goths and Vandals.

What, now, about junk within cyberspace? My gloom-and-doom scenario for the physical world covers many decades. For cyberspace, by contrast, I offer a scenario that could play out in the next two decades, or even sooner. It's a Rake's Progress, or Spiral, in five stages:

(1) The ordinary computer workplace (where we write the simple e-mails for our colleagues, the simple formal reports for our heads-of-Department) becomes more and more the domain of excessively sophisticated, frilly, file formats. In the early 1980s, it was at least thinkable that a manager or a prof could write a departmental report in plain ASCII. (Admittedly, even then primitive word-processors, notably WordStar, were on the scene, adding some non-ASCII mark-up to the plain text, for instance for bolding and italics.) By the late 1980s, a word-processor file was the usual thing. The format of those files inevitably evolved in the direction of increasing complexity. In the early stages of the iron spiral, we had contraptions like Word 3.0 and Word 95. Then it was Word 97 and Office 2000 and Thing 2003, with more and more provision for pretty pictures in our reports, plus fancy fonts, plus password locking, plus revision tracking, plus comment balloons, plus linking to external documents.

What's next? Multimedia, of course. So we will find ourselves producing ordinary reports and memoranda not with keyboard alone, but with webcam and microphone. We'll be inserting video clips even as we might now insert static clip art. It will be the same old content. For how many ways are there of telling a person where on the Web to find Defence Ministry annual reports for the Baltic and Scandinavian countries? Or what, apart from a simple histogram, is an appropriate presentation of the failure-voltage distribution for a population of 9000 nominally 6-volt incandescent bulbs in an engineering test that involves dialing the voltage up to final burnout? Or what, apart from a simple bulleted or numbered list, is a clear presentation of rose varieties suitable for the gardener in Timmins, Ontario? It cannot be that now, with the new presentation technologies, we become better foreign-policy analysts, better electrical engineers, better horticulturists than we were in 1980, much though the commercially entrenched software houses would like to foster that illusion. What changes is only that content gets packaged in increasingly glitzy ways, the 800-word memorandum now taking up not 5 kilobytes for its 5000 characters, but 20 kilobytes, 100, 200, 300 kilobytes, a megabyte, 10 megabytes.

I've twice performed the experiment of comparing the number of bytes in a plain-text file with the number consumed when the same content is packaged in a more or less current, more or less up-to-date, Microsoft Word format. On the first occasion, at some point in some such year as 1998 or 1999, a tiny memorandum bulked up by a factor of about 4. On the second occasion, in February of 2004, a roughly 50-doublespaced-page academic paper bulked up by a factor of just under 20 (19.36, if you really must know). No multimedia in either test: that treat lies ahead, should I prove masochistic.
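The experiment amounts to nothing more than a ratio of two byte counts. A minimal Python sketch, in which the function name and the file names are hypothetical:

```python
import os


def bloat_factor(plain_path: str, formatted_path: str) -> float:
    """Size of the formatted file divided by the size of the plain-text file."""
    plain_bytes = os.path.getsize(plain_path)
    if plain_bytes == 0:
        raise ValueError(f"{plain_path} is empty; the ratio is undefined")
    return os.path.getsize(formatted_path) / plain_bytes


if __name__ == "__main__":
    import sys

    # Usage: python bloat.py paper.txt paper.doc
    if len(sys.argv) == 3:
        print(f"{bloat_factor(sys.argv[1], sys.argv[2]):.2f}")
```

Run on a plain-text file and its word-processor twin, it prints a figure of the kind reported above; the exact value will presumably vary with the word-processor version used to save the file.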

(2) In charting the rise of elaborate formatting for the humble memorandum or report, I mentioned password locking. We'll see a lot more of that. The language of Digital Rights Management and Enterprise Rights Management will gradually percolate down from the realms of high-level information technology to the substantive managerial desk, as central authorities give themselves more and more control, finding more and more creative ways to use available security provisions. Those provisions will keep ramifying as the file formats become frillier. Soon it will be normal for corporate head offices, and of course governments, to possess a pair of powers. (a) They will be able to make all authorized copies of a given sophisticated-format document unreadable. (One way this might work: If you are to read the widget_memorandum.frillydoc file on your workstation, your workstation has to contact the Acme Widget head office for an authorization key. So the Acme Widget people know that you, Jane B. Citizen, at such-and-such a numerical IP address, at such-and-such a time, were a reader. If, later, it is found expedient at the head office to assert something inconsistent with what for good or ill is asserted in widget_memorandum.frillydoc, the authorization key can become "accidentally corrupted", locking all readers out.) (b) The central authorities will be able to trace unauthorized copies of widget_memorandum.frillydoc, by incorporating a digital watermark in that ever-so-intricate file. So if an attorney were to present some whistleblower's unauthorized copy of widget_memorandum.frillydoc in court, Acme Widget could inspect the unauthorized copy and work out from which specific authorized copy the unauthorized copy got made. Was the whistleblower Jane B. Citizen? Or was it, rather, Prof. A.N. Other? They'll know.

Organs of state security in the late and unlamented USSR kept a registry of typewriters owned by the citizenry. Like so: Jane B. Citizen, out in Nizhny Novgorod, owns a machine with a small dent in the C. And in the serif of her P, there's a slight nick. Prof. A.N. Other's spare machine at his Moscow dacha has a perfect C, but an asymmetry, readily apparent to the high-power microscope, in the bowl of the P. The KGB spooks, then, could work out whether the person who typed up the latest confiscated copy of, say, the Universal Declaration of Human Rights was or was not the hapless Jane. The USSR was notorious for poor infotech engineering. And yet its spooks were scary enough. If our political culture does not change (if, that is, we turn a deaf ear to the pleas for participatory democracy and open administration so eloquently made by our Noam Chomskys, by our George Soroses), then we may expect really scary competence from infotechnology engineer-spooks within the Department of Homeland Security in the USA of 2010 or 2015.

It is no secret which specific elements in the contemporary economy will help the organs of corporate and state security acquire the malign pair of powers we've just identified. The industry consortium to look out for is the Trusted Computing Group (http://www.trustedcomputinggroup.org), an alliance of AMD, HP, IBM, Intel, and Microsoft. For details, you can check the ' "Trusted Computing" Frequently Asked Questions' essay by Ross Anderson, of late a Reader, now a Professor, in security at the Computer Laboratory in Cambridge, UK. There's a link to the FAQ from his homepage, at http://www.cl.cam.ac.uk/users/rja14/.

(3) We are thus destined to reach a stage in the Rake's Spiral at which the long-familiar post-September-11 media chill deepens. The awareness that content in the style of widget_memorandum.frillydoc can be revoked when convenient, and that unauthorized copies of such content can be tracked, need never penetrate into society at large. It suffices that activists, editors, and investigative reporters develop that awareness. And how can they fail to develop it? One of the things that really strikes me as I ponder my own principal professional association, a grouping of several hundred Canadian freelance editors, is the extent to which people there are knowledgeable about their word processors. Where do you turn when you want to know all about comment balloons in Word 2000, all about bugs in the XP office suite? To Technical Support at Microsoft? Oh, please.

(4) Hand in hand with that rising awareness will come a rising resentment, again perhaps not in society at large, but certainly in the relevant professional circles. We will in due course acquire an ill-tempered activist underground, working to subvert the widget_memorandum.frillydoc security controls, rather as dissidents used to subvert the USSR, or - a markedly less happy example - as readers of the 2600 magazine used to subvert international phone networks.

On the one hand in this strident resistance will be the nobly impassioned civil libertarians, the Andrei Sakharovs and the Elena Bonners. But on the other hand will be the criminal elements, combining the irresponsibility of the 2600 phone-phreak culture with the ruthlessness of the post-communist Russian mafia.

Should you want to check some of this stuff out, you may find (I did) that the 2600 phone-phreak culture is as close as your nearest Chapters bookstore. Nowadays, the readership is talking about 'hacking the genome'. A recent 2600 article, on the magazine rack in February of 2004, makes on the one hand the point that we could in a moment of lamentable inadvertence do, um, something majorly bad to the biosphere, and on the other hand the point that the requisite skills can be had from a laboratory course at the local college. There's of course significant talk in those same vandalic, gothic columns about networked computing.

(5) Security controls do not, as a rule, last. We may expect, then, the New World Order of widget_memorandum.frillydoc to go the way of the late and unlamented USSR. We may, further, expect this grim old law to hold true, that the more ill-tempered the opposition, the worse the end result. Not all revolutions are worthwhile. Maybe we will be blessed with an opposition dominated by the impassioned civil libertarians, leading the world to a mere 1989-style Velvet Revolution. Maybe we will be cursed with a predominantly criminal opposition, driving us into a ruckus more in the style of 1789.

What will it actually be like, if, all our efforts notwithstanding (I am a fan of the Velvet Revolutions, not of the Robespierres) there is some admixture of 1789? Perhaps it will feel like this:

One calm and sunny morning in 2019, you learn of disruptions in the Trusted Computing infrastructure from a BBC headline in your Internet Explorer window. In this case as in all cases, you take the precaution of checking the BBC computing-and-civil-security reportage against http://slashdot.org/. (Slashdot: "News for Nerds. Stuff that matters.") What you read is sufficiently unsettling to make you skip a second cup of coffee and proceed immediately, as the diligent servant of your paymaster, to your telecommuting workspace. The familiar corporate window opens with its usual celerity. But now half the documents in your workgroup list are invisible. Anticipating plunging markets, you set up a meeting with your broker. Her face on your screen looks tense. Technical difficulties, she says, are preventing the brokerage from executing any client sell orders this morning.

You try, nervously, to work. A little before lunch, your personalized enhanced-"security" news ticker (a particularly sexy application of *.frillydoc concepts) fails. At that instant you suspect it was a mistake not to have raced to the banking machine down the street upon first reading the BBC. As you hear a siren, two sirens, a few blocks away, you wonder for how many days or hours the basic broadcast news services, even the 911 lines, will stay up.

And you find yourself applying to the entrenched commercial software houses a remark of John Ralston Saul's (as he is cited in Prof. David Ehrenfeld's 'Coming Collapse' essay): 'Nothing seems more permanent than a long-established government about to lose power, nothing more invincible than a grand army on the morning of its annihilation.'

No-Frills GNU/Linux: A First Look

So much, then, for the Rake's Progressive Spiral of Frills. Clearly we must distance ourselves from the futile growth in software complexity and the eventual wrenching corollary to that growth. Clearly we do well to adapt to infotechnology an idea put forward in another context by Prof. Ehrenfeld: we do well now to develop a shadow infotechnology infrastructure, ready to serve people when things go suddenly bad.

I've argued in the advanced-readers appendix of 'Utopia 2184' that for the very long term, and to meet the very worst conceivable engineering scenario, we do well to develop amateur TCP/IP packet radio under the AX.25 protocol:

In packet radio, a Terminal Node Controller (TNC) connects the usual radio transmitting-and-receiving gear to a computer, in many cases a Linux box. The usual protocol for encoding data into packets for the TNC is AX.25. Awkwardly, the technology seems to be at its best with frequencies too high for ionospheric skip, limiting the range of direct communication to at best a few tens of kilometres. There are, of course, relay schemes. Further, I gather that some work has been done also in lower-frequency bands. Transmission speeds even in the highest-frequency bands are poor, one conceivable rate being a paltry 1200 bits per second. That's the speed of an entry-level or midrange home-computer modem around 1986: too slow for sound or graphics, although fine for bulletin boards, and I imagine fine both for text-only Web surfing (in the style of the lynx client) and for mails. I gather that AX.25 somehow makes it possible to exchange even TCP/IP packets, as we know and love them on the fiber-optic-backbone Internet, albeit at 1986 speeds.

We shall perhaps in a time of social breakdown find the conventional Internet silent, and yet also find rudimentary TCP/IP communications surviving on AX.25. Really dedicated ham operators could keep their sets running with photovoltaic panels, over periods of months and years, when more demanding communications institutions become damaged beyond straightforward repair. An AX.25 "Internet" would then serve as a bridging technology, helping the world restore conventional Internet services over the medium term.
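To make 1200 bits per second concrete, here is a back-of-the-envelope calculation in Python. It assumes 8 bits per byte and ignores AX.25 framing, acknowledgements, and retransmissions, all of which would make real transfers slower; the sizes are those of the 800-word memorandum discussed earlier, in plain text and in frillier packagings. The function name `transfer_seconds` is illustrative.

```python
def transfer_seconds(size_bytes: int, bits_per_second: int = 1200) -> float:
    """Idealized transfer time: 8 bits per byte, no framing, no retransmissions."""
    return size_bytes * 8 / bits_per_second


# Sizes of the 800-word memorandum in various packagings, as given earlier.
MEMO_SIZES = [
    ("plain-text memo, 5 kB", 5_000),
    ("word-processor memo, 300 kB", 300_000),
    ("multimedia memo, 10 MB", 10_000_000),
]

if __name__ == "__main__":
    for label, size in MEMO_SIZES:
        minutes = transfer_seconds(size) / 60
        print(f"{label}: about {minutes:.1f} minutes at 1200 bit/s")
```

Even on these idealized figures, the plain-text memo crosses the link in about half a minute, the 300-kilobyte version in over half an hour, and the 10-megabyte version in more than 18 hours.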

Here, however, I'll focus on the medium term, only 10 or 20 years out, and on a less severe engineering scenario. I'll assume, that is, that we still have functioning public institutions, and in particular that the admixture of 1789 mayhem within the coming 1789-cum-1989 ruckus has been mild enough to leave us the capability of exchanging TCP/IP packets over the conventional satellite-and-fibre-and-copper Internet. What should we do today - even as, seeking to mitigate the horrors of the eventual electricity-grid crisis, we already today buy our compact fluorescent lightbulbs, already today keep notes on wind turbines and solar panels?

Clearly we must promote not just open-source computing, but a style of open-source computing that minimizes our investment in complexity. We'll politely tell people that we do not accept attachments in e-mail, let alone attachments in the style of widget_memorandum.frillydoc. We'll emphasize content over presentation, formatting both our private and our Web-mounted files as either untagged ASCII or XML-tagged ASCII. (The very Web-mounted page you are reading is tagged in an XML application, namely XHTML.) We'll have little to do with sounds or static images. (We might, admittedly, listen to radio on the Web for entertainment. Or we might open the odd static image, needed for our scholarly or scientific work, with a GNU/Linux tool such as gimp or eog.) We will have very little indeed to do with Macromedia Flash, or with Web movies, or with their progressively more intricate, progressively more time-wasting, successors.

And then, as the high-end Internet dissolves into chaos, we will be happy enough. One of the beauties of XML is that the intellectual content continues to make sense if the (unavoidably intricate) machinery for processing the tagging breaks down. I have, for instance, an XHTML Web page that makes a few theological points. We can see what the page is about even if for some extraordinary reason we lack a functioning browser, and so are forced to view the file with an ASCII pager or ASCII editor:

<h3> In Which My Tutorial<br /> is Concluded<br /> by Sainte Thérèse of Lisieux,<br /> Who Discourses<br /> on Diamonds or Lozenges </h3> <p class="initial"> There follows a great whirling and swinging, Kent momentarily gone. The Saint with me at this instant really is, as I did request, she of the roses, the tough little Doctor of the Church. </p>

Here we can guess, probably, that `<br />` is a code for a linebreak and that Thérèse is "Therese" written with French accents. Moreover, it is entirely clear what the substantive content of the passage is - something about Saint Theresa of Lisieux, appearing to the narrator in a vision.
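The same point can be made mechanically. The following Python sketch uses the standard library's html.parser to throw the tags away and keep the words; it is an illustration, not a recommended viewer. The fragment is adapted from the page quoted above, with the accented letters here written as XHTML character references (`&eacute;`, `&egrave;`); the class and function names are illustrative.

```python
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Keep the character data of an XHTML fragment and drop the tags."""

    def __init__(self) -> None:
        # convert_charrefs=True turns references such as &eacute; into characters.
        super().__init__(convert_charrefs=True)
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        # A self-closing <br /> also arrives here, via the default
        # handle_startendtag; treat it as a word break.
        if tag == "br":
            self.parts.append(" ")

    def handle_data(self, data):
        self.parts.append(data)


def plain_text(markup: str) -> str:
    parser = TextOnly()
    parser.feed(markup)
    parser.close()
    # Collapse the runs of whitespace left behind where tags used to be.
    return " ".join("".join(parser.parts).split())


FRAGMENT = ('<h3> In Which My Tutorial<br /> is Concluded<br /> '
            'by Sainte Th&eacute;r&egrave;se of Lisieux </h3>')

if __name__ == "__main__":
    print(plain_text(FRAGMENT))
```

With the tags gone, what remains is simply the heading, "In Which My Tutorial is Concluded by Sainte Thérèse of Lisieux": the markup carried presentation, not sense.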

You may find my sermonizing more than mildly reminiscent of those sour and earnest exhortations to eat granola in place of hamburgers. But are such exhortations a bad thing? We know that the production of hamburger meat involves, on the farm, the squandering of antibiotics and fossil fuels; at the slaughterhouse, unspeakable animal terror; at the table, grease for sure, pathogenic bovine bacilli as a possibility. Once we've thought about it hard enough, we find it fun to decline the proffered bovineburger.

So, too, for no-frills, or as we might also say, "Deep Green", computing. Once we avoid the *.frillydoc stuff, we find there is not a lot we can do with our hardware. We can read the news. We can read a little fiction, embellished with a few modest graphical decorations (as when, say, we shiver for the umpteenth time over a Web-uploaded 'Hound of the Baskervilles' with Victorian drawings). We can hunker down and do our real work, in our own particular branch of scholarship or science. Music? Not on the workstation, or at least not often! We turn a traditional radio on at breakfast and dinner time, and occasionally also make our own music, say in a choir. Nature films? We end up, instead, working with real plants or interacting with real animals, in real garden or woodland. Leisure-time friends, as distinct from professional colleagues? We look for them in face-to-face meetings, in our own part of town. Forsaking virtual realities, we acquire lives.

No-Frills GNU/Linux, Unix Permaculture, and Noosphere Conservation

The personal-workstation philosophy that I'm urging here will resonate with fans of a 1998 essay, 'The Elements of Style: UNIX as Literature', by Thomas Scoville (available under the 'Essays' link at his http://www.thomasscoville.com/):

With UNIX, text - on the command line, STDIN, STDOUT, STDERR - is the primary interface mechanism: UNIX system utilities are a sort of Lego construction set for word-smiths. Pipes and filters connect one utility to the next, text flows invisibly between. Working with a shell, awk/lex derivatives, or the utility set is literally a word dance.

And again:

You might argue that UNIX is as visually oriented as other OSs. Modern UNIX offerings certainly have their fair share of GUI-based OS interfaces. In practice though, the UNIX core subverts them; they end up serving UNIX's tradition of word culture, not replacing it. Take a look at the console of most UNIX workstations: half the windows you see are terminal emulators with command-line prompts or vi jobs running within.

Scoville here uses 'UNIX' in its old all-caps trademark style, echoing the specific work at Bell Labs and other commercial entities, who in various ways treated the word 'UNIX' as intellectual property. Nowadays, however, one thinks of unices: the old UNIX, under whatever formal auspices; the two old Digital Equipment Corporation offerings (firstly Ultrix, secondly OSF/1 - with OSF/1 itself in due course renamed Digital Unix); the old Sun Microsystems SunOS, and its contemporary descendant, Solaris; contemporary AIX at IBM, contemporary HP-UX at Hewlett-Packard; and of course above all BSD (the solid, respectable innards of the only outwardly GUI-fied, only outwardly effete, Mac OS X) and GNU/Linux.

The strength of the family of unices whose ethos Scoville so brilliantly captures is reflected in their underlying unity, even over three decades of development. What Scoville had to say in 1998 makes sense now, five or six years later. It made perfect sense in 1994, when Digital Unix was still current and Ultrix not quite defunct. It made reasonable sense in the "UNIX" culture of 1984, even of 1972. (1972! According to http://www.unix-systems.org/what_is_unix/history_timeline.html, system pioneers Dennis Ritchie and Ken Thompson were in June of that very good year writing, '...the number of UNIX installations has grown to 10, with more expected...')

How far back can we go? A little before even 1972. The gestation of the historical parade of unices can be traced to discussions at Bell Labs in the early (northern-hemisphere) spring of 1969. It's therefore logical enough that we should find unices tracking their time by counting the number of seconds elapsed since the start, at Greenwich, of New Year's Day, 1970.
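Since the epoch is fixed, the count for any moment is easy to reproduce; on a GNU/Linux box, `date +%s` prints the current value directly. Here is a small Python sketch using only the standard datetime module; the name `unix_seconds` is illustrative.

```python
from datetime import datetime, timedelta, timezone

# The Unix epoch: the start of New Year's Day 1970 at Greenwich (UTC).
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def unix_seconds(moment: datetime) -> int:
    """Whole seconds (rounded down) since the epoch, for a timezone-aware datetime.

    Like Unix time itself, this counts every day as 86400 seconds,
    ignoring leap seconds.
    """
    return (moment - EPOCH) // timedelta(seconds=1)


if __name__ == "__main__":
    new_year_2004 = datetime(2004, 1, 1, tzinfo=timezone.utc)
    print(unix_seconds(new_year_2004))      # 1072915200
    print(int(new_year_2004.timestamp()))   # the same value, via the standard method
```

New Year's Day of 2004, the year this essay was drafted, thus falls a little over a thousand million seconds into the unices' reckoning.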

And how far forward can we go? The present kernels, as we know them from, say, Debian GNU/Linux, may conceivably be replaced over coming decades, say by something along the lines of the now-emerging Debian GNU/Hurd. But we may hazard the guess that something akin to the unices, with, as Scoville puts it, text the 'primary interface mechanism', will be around for a long time.

Activists ministering to the biosphere are nowadays inclined to stress the notion of "permaculture". In my 'Utopia 2184: A Green Manifesto in the Traditions of the Permaculture and Catholic-Worker Movements', I intimate, by way of subtext, that in discharging the duty of permaculturist concern that we owe to our biosphere, in building for the long term, we encounter a special joy. Specifically, my insinuation in that essay is that the joy is akin to, perhaps is actually a part of, the joy of the religious mystics.

With the biosphere, it is helpful to contrast the "noosphere". For present purposes, it suffices to characterize our noosphere loosely, as humanity's accumulated dynamic system of artistic and scientific texts, just as our biosphere may in a crude approximation be identified with Earth's accumulated biomass. Here are seven examples of things in the noosphere: (a) The poem on the Hallmark birthday card you just bought at the mall in Chicago or Toronto. (b) The French translation of that poem, which you would have found if you had done your shopping in a similar mall in Montréal. (c) A poem nowhere written down, but current in the oral tradition of some aboriginal tribe. (d) The actual language, with its intricate conjugations and declensions (or whatever), of that same tribe. (e) The software in your microwave oven. (f) The software, implementing pretty much the same abstract algorithm, but with a different structure of arrays, counters, and counter-incrementing loops, in your neighbour's oven. (g) The text in your calculus book, constituting, say, Prof. A.N. Other's specific presentation of the eternal, mind-independent verity that the tricky two-dimensional integral we encountered a while back really does evaluate to a-to-the-fourth over 4.

A somewhat subtle point arises for many of the creations in the noosphere, perhaps particularly for the final item in our sevenfold list. The mathematical fact that Prof. Other is expounding is independent of human mental activity, and so stands outside the noosphere rather as the Sun stands outside our biosphere. What stands within the noosphere, as a thing that came into existence through the action of a particular worker at a particular time, is, rather, the presentation. That's the thing Prof. Other concocts in, say, a mixture of the formal integral-calculus notation of the twentieth century (rather than of earlier centuries), and textbook-voice English (rather than, say, literary-voice French).

We have a duty of permaculturist, historically informed, concern toward the noosphere even as we have a duty of permaculturist concern toward the biosphere. We injure our biosphere when, for instance, we turn on a domestic appliance connected to a nuclear power plant, or again when we toss phosphate detergent suds into a drain that itself discharges into a lake. Analogously, we injure our noosphere when we write some lines of C or C++ for a socially pernicious product, say for a guns-and-gore video arcade game, or again when we launch some new ethnic joke into our city's oral tradition.

I'll finish this discussion by developing the suggestion that we discover a mystic joy not only in ministering to the health of the biosphere, but also in undertaking a ministry of permaculturist concern within the noosphere. As you'll see in a moment, my envisaged approach involves indulging only selectively and discerningly, in permaculturist spirit, in infotechnology innovation.

The biosphere-noosphere permaculturist parallel, incidentally, sheds some light on the otherwise puzzling fact that persons temperamentally drawn to gardening are often drawn also to editing, publishing, or writing. (Two eminent examples are the twentieth-century Sissinghurst horticulturists and wordsmiths Vita Sackville-West and Harold Nicolson.)

I'll develop my suggestion at some length, in story-with-screenshots form, describing what it might feel like to visit a workspace dedicated to the welfare of our noosphere. I'll present a fair mass of concrete technical detail, sketching lines of development that I may some day spell out in other essays on GNU/Linux workstation management.

For the most part, what I have to say reflects my own real-world practice. Most notably, I really do use over-the-desk pigeonholes, in a hutch which I commissioned from simple timber around 1984 and had a craftsperson enhance later in the 1980s. And of course I nowadays really do use Debian GNU/Linux. I must, however, remark that my living arrangements are too constrained to allow my walls to be literally lined with cartons and books (I apply Library of Congress formalism not to bulky items, but only to manilla folders and digital files), that my cyber-forensic capabilities are modest in the extreme, that the computing and book-parking arms of my U-shaped desk are one-third shorter than the arms described in this story, that I do not at present have the good fortune to reside in a formal community of scholar-scientists, and that I have scant money or time for interior decorating.

No-Frills GNU/Linux in the Noosphere:

Details from a Debian Implementation

This is a community of twenty or thirty workers. Some pursue careers in literature or in the literary trades (in the translating, or the editing, or the appraising and analyzing, of poetry and fiction). Some write technical manuals. Some are scientific expositors, whether at the popularizing or at the textbook level. Some create software. Some others specialize, rather, in selecting, configuring, and deploying it. Many work in substantive science.

The disposition of their accommodations and equipment recalls that special, relaxed concentration familiar to all of us when our current authorial, editorial, laboratory, or computational work (our duty of the moment, whatever our particular line of intellectual labour may be) is proceeding smoothly. We thus notice with approbation the ecologically sound mixed-vegetation borders, the symbiotic combinations of flowers and vegetables, in the communal grounds. We notice also with approbation the simple character of the furniture and fittings indoors: the low-energy semiconducting devices for corridor illumination; the thermally efficient wood hearths for heating; the serene austerity of the decoration, with its terra cotta and fieldstone, its dried plants and wickerwork, in place of the alienating vinyls and polyesters of the Cold War era.

Now it is time to step into one particular workspace in this house of quiet concentration.

Our gaze is drawn first to the cubicle walls. On the capacious shelves lining them, running from floor almost to ceiling, are nearly a thousand books; perhaps four thousand manilla folders for papers; a few hundred CD-ROMs; the tiniest scattering of video cassettes; and a few dozen other minor items, such as shelved cartons of geological, botanical, or archaeological specimens.

The classification-and-cataloguing formalism is itself a thing of delight, a meticulous adaptation of the Library of Congress formalism. Evidently a principle of economy of effort is at work here. It's an instance of the general principle, well known to programmers recycling code, that in place of reinventing the wheel, we should continually search out and imitate, occasionally improve upon, existing good practice. We recognize at once the Library of Congress formalism, so dominant in the best libraries of North America, namely the libraries (not of municipalities, but) of universities. (Why not imitate British or Continental practice? Although there may be excellence there, too, the Library of Congress and its derivatives in the campuses of North America are perhaps unique in the liberality of their funding, in the depth of their cataloguing documentation, and in the collection-to-collection uniformity of their classification decisions. Let those who hanker after the Old World compare the efficiency of the University of Toronto, both in its great Robarts and Gerstein repositories and in its dozens of branch-campus, departmental, and federated-college collections, with the archaic cataloguing muddle of the Oxbridge libraries. Much in Oxbridge is preferable to current Toronto academic practice. The librarianship, however, is not.)

We find on these shelves, then, law materials under K, education under L, even as we do on the vaster shelves of a good North American university library. In addition, as we descend from broad generalities to increasingly minute particulars, we find (here even as on campus) the science under Q, the astronomy under QB, the stellar spectroscopy under QB465.

These shelves, it is worth stressing, contain not books alone, but manilla document folders, CD-ROMs, and other materials, in each case correctly interpositioned with the books. So (to give one example of such interpositioning) we find manilla folders with offprints pertaining to the historical development of the Morgan-Keenan system in stellar spectroscopy duly placed near an old bound 1940s volume of stellar spectra.

Closer inspection reveals that the walls are lined in the Library of Congress style twice over, the A, B, ... , V, Z sequence of classes duplicated. On the one hand, we have a sequence for public documents - for instance, published books, or again photocopies of published print journals, or again printouts from public scientific-preprint servers in the arXiv family. Predictably, that sequence runs from the standard A, B, ... (encyclopedias, philosophy-psychology-religion, ...) all the way down to the standard V, Z (naval science, publishing-in-general). On the other hand, we have an A, B, ... , V, Z sequence for "private studies" - with, say, private reading notes on the Fortran programming language duly shelved under QA76.73.F25, private reading notes in agriculture duly shelved under S, a small carton of private specimens illustrating lowbush-blueberry tussock moth infestation likewise shelved under S. (This particular workspace has only twenty or so items in agriculture. That's a number too low to justify subdividing the S class. On the other hand, there is so much material on programming in this workspace that it really has proved necessary to split QA, 'Mathematics', into a few of its standard subclasses, most significantly at the level of the standard Library of Congress classes for individual programming languages.)
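The interfiling described above, in which a manilla folder and a bound volume find their places purely by call number, can be made concrete with a short sort-key function. What follows is a minimal Python sketch resting on simplifying assumptions of my own, not on anything reported of the workspace itself: a call number is taken to consist of class letters, an optional class number (possibly with a decimal extension), and at most one Cutter number such as `.F25`. Full Library of Congress shelflisting rules (double Cutters, dates, volume designations) are not handled. The name `lc_sort_key` is hypothetical, and `QA76.73.C15` in the usage example is an illustrative addition; the other call numbers come from the text.

```python
import re
from decimal import Decimal

# Simplified LC call number: class letters, optional class number (with an
# optional decimal extension), optional single Cutter such as ".F25".
# Assumption: no double Cutters, dates, or volume numbers.
LC_PATTERN = re.compile(
    r"^(?P<letters>[A-Z]{1,3})"
    r"(?P<number>\d+(?:\.\d+)?)?"
    r"(?:\.(?P<cutter_letter>[A-Z])(?P<cutter_digits>\d+))?$"
)


def lc_sort_key(call_number: str) -> tuple:
    """Return a tuple that orders simplified LC call numbers in shelf order."""
    match = LC_PATTERN.match(call_number.strip().upper())
    if match is None:
        raise ValueError(f"unsupported call number: {call_number!r}")
    letters = match.group("letters")  # "Q" < "QA" < "QB" as plain strings
    number = match.group("number")
    # A bare class ("S") files ahead of any numbered subdivision ("S1");
    # class numbers file numerically, so QA76 precedes QA100.
    number_key = (0, Decimal(0)) if number is None else (1, Decimal(number))
    cutter_letter = match.group("cutter_letter")
    if cutter_letter is None:
        cutter_key = (0, "", Decimal(0))
    else:
        # Cutter digits file as a decimal fraction: .F25 comes before .F3.
        cutter_key = (1, cutter_letter, Decimal("0." + match.group("cutter_digits")))
    return (letters, number_key, cutter_key)


# Usage: books, folders, and cartons interfile by call number alone.
shelf = [
    ("bound volume", "QB465"),
    ("manilla folder", "QA76.73.F25"),
    ("book", "Q"),
    ("carton of specimens", "S"),
    ("book", "QA76.73.C15"),  # illustrative call number, not from the text
    ("book", "QB"),
    ("book", "K"),
]
for kind, call_number in sorted(shelf, key=lambda item: lc_sort_key(item[1])):
    print(f"{call_number:<14} {kind}")
```

Run as shown, the loop lists the items in the order K, Q, QA76.73.C15, QA76.73.F25, QB, QB465, S. Since the public and private sequences are shelved separately, the same key would simply be applied to each sequence on its own.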

Close inspection also reveals a special, short, "maintenance" section, covering perhaps a mere half-metre of shelving. Here are kept the manuals, photocopies, CD-ROMs, and the like needed to run the equipment in the office. Among the folders of photocopies are a couple dedicated to the Library of Congress formalism itself, duly made in the reference room of a big campus library, on the strength of a five-minute consultation with a highly qualified reference librarian.

Finally, inspection of the shelves reveals a provision for the serving of "clients", whether commercial or pro bono publico. Here the regulating formalism is not the Library of Congress, but the classification system of a law firm: clients are ordered alphabetically, and for each client there is a chronologically ordered set of what could be called either "projects" or "matters".

We turn our attention now from walls to desk. It's a big desk, shaped like a flat-bottomed U, its short segment the size of a standard student desk, its two long segments more reminiscent of dining or boardroom tables. The short segment is the handwriting desk, dedicated to such delicate tasks as pencil-and-paper mathematics, or again the close reading of technical books. (That is the kind of reading undertaken with underlining graphite in hand, clean scrap paper at the ready, supplementary books also open as appropriate.) Hovering on stubby wooden pillars over this short desk, in other words over the U-bottom, is a great hutch with twenty-four pigeonholes, each capable of holding five or ten fifty-sheet manilla folders. The pigeonholes constitute a parking space for active files, temporarily removed from the wall shelving. On the underside of the hutch, a 40-watt fluorescent tube provides even and brilliant illumination for the writing surface, in a room otherwise maintained, as an electricity conservation measure, in dim half-light. (Not the prodigal ceiling lamp, but a hand-held astronomical flashlight, with light-emitting diodes, is the normal means of illumination when book spines and folder labels have to be inspected on the capacious shelves, at times of document retrieval and replacement.)

As the over-desk pigeonholes supply short-term parking for active manilla folders, so too does one of the two long arms of the U supply short-term parking for books. Here, arranged in two rows, each almost two metres long, are the dictionaries, software manuals, and scientific books needed for this month's principal studies or this month's principal client matters. (Or rather, such is the formal theory, a little optimistically written up in a filing-policy document in the workstation we shall shortly inspect! In practice, we shrewdly guess, the book-parking table tends to accumulate the books that are in rather constant use from one month to the next, no matter what studies or client ministrations may be in hand. For instance, it is a safe bet that in this particular work space Webster's Third New International Dictionary, and also Musciano and Kennedy's HTML & XHTML: The Definitive Guide, will be needed every few weeks.)

The other long arm of the U is the most exciting part of the desk. Here are kept the tower, monitor, and keyboard of the workstation, and also the printer. Here also are whatever other modest peripherals a deeply green workstation may legitimately require in this particular worker's specific branch of activity. (In addition to a printer, we glimpse a pair of low-grade loudspeakers. Although backup may well be performed with a humble combination of mirroring-to-slave-drive and writing-to-CD, we piously half-hope we see, half-fear we fail to see, a more elaborate backup solution, such as a tape drive. We are not sure whether, the lighting being what it is, we do or do not see a scanner. There clearly is a compact fluorescent lamp, its dark fourteen-watt glass coil ready to illuminate the peripherals should need arise.)

Theologians speak of the 'tranquillity of order'. The workstation is that apparatus in this space which is the tranquil fount of order. Let us, then, examine its design.

Evidently the ideal has been the occupying of that "sweet spot" which maximizes real intellectual productivity, by maximizing the worker's grasp and control of substantive subject-matter. If a workstation is made too simple, it becomes a mere typewriter. If it is made too complex, it ascends Tainter's spiral, turning itself into an ever-so-seductive hobby. Here, then, things are, in the phrase often attributed to Einstein, made as simple as they can be, no simpler.

In terms of the history of computing, we find here selected throwbacks to the early-to-mid 1990s "golden age", midway between the archaic experimentation of the 1980s and the luxuriant decadence of the early 2000s. Static images can, when necessary, be edited. Audio, if heard at all, is not heard in a comfort that invites the worker to wallow in it all through the day. The Web can, of course, be browsed in many ways, with images or (we'll look at this carefully in a moment) without.

As a concession to post-golden-age modernity, facilities are installed for handling, cautiously, even the most elaborate of file formats. Some of those tools are of a mildly forensic character: if the worker is forced to receive a foobar.frillydoc from outside, the unwelcome incoming file is liable to get close scrutiny, perhaps even at the level of certain individual bytes. Although there are no black-hat operations here, provision is made for certain white-hat, in other words juridically and morally licit, cyber investigations.
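Byte-level scrutiny of this kind amounts, at its simplest, to disregarding a file's name (which the sender controls) and looking instead at its leading bytes. The Python sketch below is an illustration only, not a description of the tools actually installed in the workspace; on a Debian system one would more likely reach for file(1), or for a hex-dump utility such as od(1). The signature table is a small, hand-picked subset of well-known magic numbers, and the names `identify`, `hex_preview`, and `scrutinize` are hypothetical.

```python
import sys
from pathlib import Path

# A few well-known file signatures ("magic numbers"). Illustrative subset only;
# file(1) consults a far larger database.
SIGNATURES = [
    (b"%PDF-", "PDF document"),
    (b"PK\x03\x04", "ZIP container (e.g. OpenDocument or Office Open XML)"),
    (b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1", "OLE2 compound file (e.g. legacy .doc)"),
    (b"{\\rtf", "Rich Text Format"),
    (b"\x89PNG\r\n\x1a\n", "PNG image"),
]


def identify(data: bytes) -> str:
    """Guess a format from leading bytes, ignoring the untrusted file name."""
    for magic, description in SIGNATURES:
        if data.startswith(magic):
            return description
    return "unknown format"


def hex_preview(data: bytes, width: int = 16) -> str:
    """Format bytes as 'offset  hex  ascii' lines, in the style of a hex dump."""
    lines = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        # Non-printable bytes are shown as '.' in the ASCII column.
        ascii_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        lines.append(f"{offset:08x}  {hex_part:<{width * 3}} {ascii_part}")
    return "\n".join(lines)


def scrutinize(path: Path, n: int = 64) -> str:
    """Report the guessed format and a hex preview of the first n bytes."""
    with path.open("rb") as handle:
        head = handle.read(n)  # read only the head, never the whole file
    return f"{path.name}: {identify(head)}\n{hex_preview(head)}"


if __name__ == "__main__":
    for name in sys.argv[1:]:
        print(scrutinize(Path(name)))
```

Invoked as, say, `python3 scrutinize.py foobar.frillydoc`, the script would report what the incoming file's first bytes claim it to be, whatever its extension says.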

Old-fashioned as the workstation in many respects appears, it furnishes a hefty capability for organizing, creating, revising, and retrieving slabs of mathematical markup and natural language, the two foundational currencies of today's networked noosphere.

We thus find, paralleling the organization into private-study and public documents on the walls, a hierarchy of private-study and public digital materials, under Library of Congress rules. Indeed it is the workstation that in the following sense governs all the classifying activity in this workspace, including the classifying of those physical materials kept on wall shelving: whenever a book, manilla folder, or other physical item is added to the collection, a corresponding record is made in the workstation. Made how - by adding an entry, perhaps, into a relational database? No. Since this is a private library, with only some tens of thousands